Spark 1.4.1

vm-007

vm-008

vm-009

1. Install Scala 2.10.4

Add the following two lines to .bashrc or /etc/profile:

export SCALA_HOME=/opt/software/scala-2.10.4

export PATH=$SCALA_HOME/bin:$PATH
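Re-running a setup script can easily append the same export lines twice. A minimal sketch of one way to add them idempotently follows; `add_line_once` is a hypothetical helper name (not part of Scala or Spark), and the choice of ~/.bashrc in the example is an assumption.

```shell
# Append a line to a file only if that exact line is not already present.
# add_line_once is a hypothetical helper name, not part of Scala or Spark.
add_line_once() {
  line="$1"
  file="$2"
  touch "$file"
  # -x matches whole lines, -F treats the pattern as a fixed string
  grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (assumes ~/.bashrc is the file you chose):
# add_line_once 'export SCALA_HOME=/opt/software/scala-2.10.4' ~/.bashrc
# add_line_once 'export PATH=$SCALA_HOME/bin:$PATH' ~/.bashrc
# . ~/.bashrc && scala -version
```

The single quotes in the example keep `$SCALA_HOME` unexpanded, so the line is written to the file literally and evaluated at login time.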

2. Configure the files under lwj@vm-007:/opt/software/spark-1.4.1-bin-hadoop2.6/conf

Edit slaves:

vm-007

vm-008

vm-009

Edit spark-env.sh:

export JAVA_HOME=/opt/software/jdk1.7.0_80

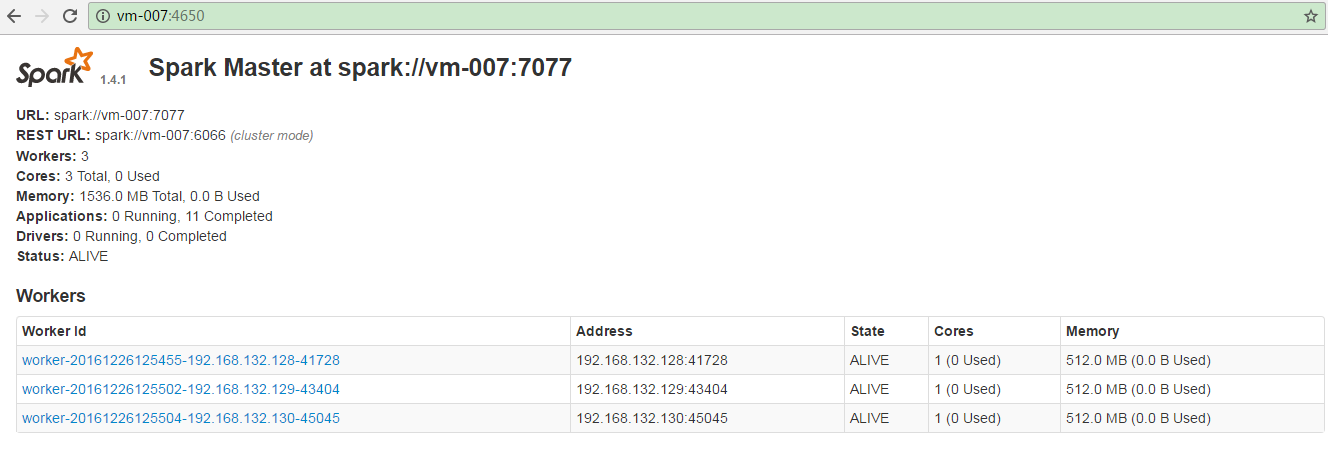

export SPARK_MASTER_IP=vm-007

export SPARK_WORKER_MEMORY=512m

export SPARK_MASTER_WEBUI_PORT=4650

export MASTER=spark://vm-007:7077

export SPARK_LOCAL_DIRS=/disk/spark/local

export SCALA_HOME=/opt/software/scala-2.10.4

export SPARK_HOME=/opt/software/spark-1.4.1-bin-hadoop2.6

export SPARK_PID_DIR=$SPARK_HOME/pids

export SPARK_WORKER_DIR=/disk/spark/worker

export SPARK_JAVA_OPTS="-server"

export SPARK_TMP_DIRS=/disk/spark/temp

3. Start/Stop
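start-all.sh starts the master on the local machine and then a worker on every host listed in conf/slaves, connecting to each over SSH, so every listed host should accept SSH logins without a password prompt. The sketch below is one way to check that before starting; `list_workers` is a hypothetical helper (not a Spark script), and the conf path in the example is assumed from the directory used above.

```shell
# Print the worker hosts from a slaves file, skipping comments and blank lines.
# list_workers is a hypothetical helper, not a Spark script.
list_workers() {
  sed -e 's/#.*$//' -e 's/[[:space:]]*$//' -e '/^$/d' "$1"
}

# Example: confirm each worker accepts SSH without a password prompt.
# for host in $(list_workers /opt/software/spark-1.4.1-bin-hadoop2.6/conf/slaves); do
#   ssh -o BatchMode=yes "$host" true && echo "$host ok" || echo "$host unreachable"
# done
```

`BatchMode=yes` makes ssh fail instead of prompting, so a host that still needs a password shows up as unreachable rather than hanging the loop.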

lwj@vm-007:/opt/software/spark-1.4.1-bin-hadoop2.6$ ./sbin/start-all.sh

lwj@vm-007:/opt/software/spark-1.4.1-bin-hadoop2.6$ ./sbin/stop-all.sh

Spark Master node:

lwj@vm-007:/opt/software/spark-1.4.1-bin-hadoop2.6$ jps

5437 Worker

5260 Master

Spark Worker nodes:

lwj@vm-009:/opt/software/spark-1.4.1-bin-hadoop2.6$ jps

7277 Worker

lwj@vm-008:/opt/software/spark-1.4.1-bin-hadoop2.6$ jps

7277 Worker

4. Monitoring page
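With SPARK_MASTER_IP=vm-007 and SPARK_MASTER_WEBUI_PORT=4650 set in spark-env.sh above, the master's web UI should be served at http://vm-007:4650 rather than on the default port 8080. A small sketch for a reachability check from the command line follows; `master_ui_url` is a hypothetical helper, and the example assumes curl is installed.

```shell
# Build the master web UI URL from a host and port.
# master_ui_url is a hypothetical helper; its defaults mirror spark-env.sh above.
master_ui_url() {
  host="${1:-vm-007}"
  port="${2:-4650}"
  printf 'http://%s:%s\n' "$host" "$port"
}

# Example: print the HTTP status code returned by the master UI.
# curl -s -o /dev/null -w '%{http_code}\n' "$(master_ui_url)"
```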

5. Submit a job

./bin/spark-submit \
--class com.blueview.spark.ClusterWordCount \
--master spark://vm-007:7077 \
/opt/software/spark-1.4.1-bin-hadoop2.6/bvc-test-0.0.0.jar
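The --master value has to match the URL the master is listening on, which in this setup is the MASTER value from spark-env.sh (spark://vm-007:7077). One way to avoid mistyping it is to assemble the command from variables. The sketch below only prints the command so it can be reviewed first; `submit_cmd` is a hypothetical helper name.

```shell
# Print the spark-submit command line for a given master URL, main class, and jar.
# submit_cmd is a hypothetical helper; it only prints, it does not run the job.
submit_cmd() {
  master="$1"
  class="$2"
  jar="$3"
  printf './bin/spark-submit --class %s --master %s %s\n' "$class" "$master" "$jar"
}

# Example: reuse the MASTER value from spark-env.sh, then run the printed
# command from the Spark home directory.
# submit_cmd "spark://vm-007:7077" com.blueview.spark.ClusterWordCount \
#   /opt/software/spark-1.4.1-bin-hadoop2.6/bvc-test-0.0.0.jar
```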